Bernstein Virtual Reality Facility

Although it is driven by multiple sensory modalities, the neural representation and processing of space and time has traditionally been investigated separately for each sensory system. This is mainly because, until recently, multimodal stimulation was hard to accomplish. New developments that make use of computer-generated environments help to overcome these limitations. In such virtual realities it is possible to present, e.g., acoustic and visual stimuli simultaneously. Moreover, virtual reality systems help to overcome the spatial restrictions of typical lab rooms and hence enable experiments with more comprehensive and natural stimulus environments.

Funded by the BMBF, a facility for experiments with virtual reality (VR) was installed at the Bernstein Center Munich. The Bernstein Virtual Reality Facility consists of two independent setups, each customized for the generation and presentation of virtual environments to either humans or rodents. Each setup allows the presentation of a visual as well as an acoustic virtual environment that can be linked to the actual movement of the individual inside the virtual environment. An identical software layout for both setups ensures that every audio-visual virtual environment implemented for one of the setups can also be used in the other.

Human Virtual Reality

In the human virtual reality setup, the visual virtual environment (VE) is presented via a high-resolution stereoscopic head-mounted display; the acoustic VE is implemented as an ambisonic free-field sound scene played back via a loudspeaker array. The test subjects are seated in a motorized swivel chair, which allows angular vestibular stimulation. They can interact with the VE through motion tracking and a joystick mounted on the swivel chair. Additionally, a 128-channel EEG recording unit is available for use in combination with the behavioral/psychophysical experiments. All modules of the VR setup (visual, acoustic, vestibular) can be used independently, allowing a multitude of psychophysical experiments.
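To illustrate what an ambisonic sound scene means in practice, the following is a minimal sketch of first-order ambisonic encoding of a single mono source. It assumes the traditional B-format convention with the W channel scaled by 1/√2; the function name `encode_first_order` is hypothetical, and the order, conventions, and software actually used in the facility are not stated here.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumed convention: azimuth is measured counter-clockwise from straight
    ahead, elevation upwards from the horizontal plane, W scaled by 1/sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front-back component
    y = sample * math.sin(az) * math.cos(el)   # left-right component
    z = sample * math.sin(el)                  # up-down component
    return w, x, y, z


# A source directly to the left (90 degrees azimuth) in the horizontal plane
# contributes to W and Y only.
w, x, y, z = encode_first_order(1.0, azimuth_deg=90.0)
```

The four channels describe the sound field independently of the loudspeaker layout; a separate decoding step, not shown, would map them onto the loudspeaker array.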


Bernstein projects that used the Human VR setup

Project B3 – Auditory invariance against space-induced reverberation. Lutz Wiegrebe (LMU), Benedikt Grothe (LMU), and Christian Leibold (LMU)

Project B5 – Temporal aspects of spatial memory consolidation. Steffen Gais (LMU), Stefan Glasauer (LMU), and Christian Leibold (LMU)

Project B-T3 – Proprioceptive stabilization of the auditory world. Paul MacNeilage (LMU), Uwe Firzlaff (TUM), and Lutz Wiegrebe (LMU)

Publications

Binaural Glimpses at the Cocktail Party? JARO (2016). doi:10.1007/s10162-016-0575-7

Ludwig Wallmeier, Lutz Wiegrebe: Ranging in Human Sonar: Effects of Additional Early Reflections and Exploratory Head Movements. PLoS ONE 9(12): e115363. doi:10.1371/journal.pone.0115363

Self-motion facilitates echo-acoustic orientation in humans.

Rodent Virtual Reality


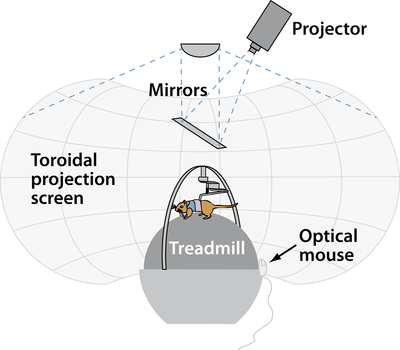

The centerpiece of the rodent virtual reality is a modified Kramer-sphere, which allows the animal to move while keeping its actual position constant. The animal is positioned on the sphere with the help of a custom-made harness that leaves head and legs freely movable. Forward and backward movements of the animal rotate the sphere, similar to a treadmill. The speed and direction of the rodent’s locomotion are registered by two sensors (conventional optical USB computer mice) and fed into the computer that generates the visual VR. The virtual environment is presented visually by projection onto a spherical canvas enclosing the Kramer-sphere. Because the rodent stays in a fixed position while navigating the virtual environment, electrophysiological recordings can be made while the animals perform behavioral and/or psychophysical tasks.


Figure: Overview of the rodent virtual reality setup
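The readout of sphere motion by two optical mice can be sketched as follows. This is only an illustration under stated assumptions, not the facility's software: both sensors are assumed to sit on the sphere's equator, 90° apart, so that the vertical axis of sensor A reports forward/backward rotation, the vertical axis of sensor B reports sideways rotation, and the horizontal axes of both report rotation about the vertical axis (turning). The names `Pose` and `integrate_step`, the counts-per-metre calibration, and the sphere radius are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # virtual position in metres
    y: float = 0.0
    heading: float = 0.0  # radians, 0 points along +x


def integrate_step(pose, mouse_a, mouse_b,
                   counts_per_metre=16000.0, sphere_radius=0.25):
    """Update the virtual pose from one pair of (dx, dy) mouse readings.

    counts_per_metre and sphere_radius are assumed calibration values.
    """
    ax, ay = mouse_a
    bx, by = mouse_b
    forward = ay / counts_per_metre    # surface travel under sensor A
    sideways = by / counts_per_metre   # surface travel under sensor B
    # Both horizontal axes see the same spin about the vertical axis;
    # average them and convert surface distance to an angle.
    yaw = 0.5 * (ax + bx) / counts_per_metre / sphere_radius
    heading = pose.heading + yaw
    x = pose.x + forward * math.cos(heading) - sideways * math.sin(heading)
    y = pose.y + forward * math.sin(heading) + sideways * math.cos(heading)
    return Pose(x, y, heading)


# One metre of straight forward running, with no turning or side-stepping.
pose = integrate_step(Pose(), mouse_a=(0, 16000), mouse_b=(0, 0))
```

Calling `integrate_step` once per sensor poll accumulates the animal's path through the virtual environment while the animal itself stays in place on the sphere.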

Bernstein projects that used the Rodent VR setup

- Project A3 – New methods to study visual influences on auditory processing. Benedikt Grothe (LMU), Andreas Herz (LMU), Christian Leibold (LMU), and Harald Luksch (TUM)

- Project B-T6 – Influence of sensory conditions on the hippocampal population code for space. Kay Thurley (LMU), Christian Leibold (LMU), and Anton Sirota (LMU)

Publications

Thurley K and Ayaz A (2016) Virtual reality systems for rodents. Current Zoology

Kautzky M and Thurley K (2016) Estimation of self-motion duration and distance in rodents. R. Soc. open sci. 3: 160118.

Garbers C; Henke J; Leibold C; Wachtler T; Thurley K (2015) Contextual processing of brightness and color in Mongolian gerbils. Journal of Vision 15(1): 13. doi: 10.1167/15.1.13

Thurley K; Henke J; Hermann J; Ludwig B; Tatarau C; Wätzig A; Herz A; Grothe B; Leibold C (2014) Mongolian gerbils learn to navigate in complex virtual spaces. Behav Brain Res 266: 161-168