Human Virtual Reality

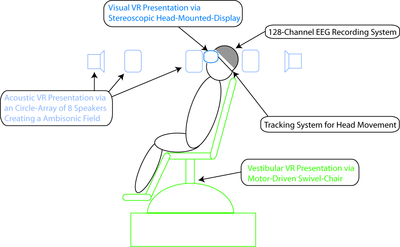

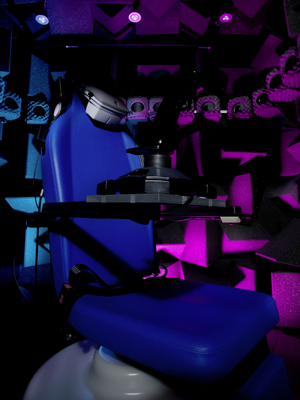

In the human virtual reality setup, the visual virtual environment (VE) is presented via a high-resolution stereoscopic head-mounted display, and the acoustic VE is implemented as an ambisonic free-field sound scene played back over a loudspeaker array. Test subjects are seated in a motorized swivel chair, which allows angular vestibular stimulation. They can interact with the VE through motion tracking and a joystick mounted on the swivel chair. Additionally, a 128-channel EEG recording unit is available for use in combination with the behavioral/psychophysical experiments. All modules of the VR setup (visual, acoustic, vestibular) can be used independently, allowing a multitude of psychophysical experiments.


Contact

Bernstein projects that currently use the Human VR setup

Project B3 – Auditory invariance against space-induced reverberation. Lutz Wiegrebe (LMU), Benedikt Grothe (LMU), and Christian Leibold (LMU)

Project B5 – Temporal aspects of spatial memory consolidation. Steffen Gais (LMU), Stefan Glasauer (LMU), and Christian Leibold (LMU)

Project B-T3 – Proprioceptive stabilization of the auditory world. Paul MacNeilage (LMU), Uwe Firzlaff (TUM), and Lutz Wiegrebe (LMU)

Publications

Binaural Glimpses at the Cocktail Party? JARO (2016). doi:10.1007/s10162-016-0575-7

Ludwig Wallmeier, Lutz Wiegrebe: Ranging in Human Sonar: Effects of Additional Early Reflections and Exploratory Head Movements. PLoS ONE 9(12): e115363. doi:10.1371/journal.pone.0115363

Self-motion facilitates echo-acoustic orientation in humans.